Reclaiming My Digital Sovereignty, Part 1 - VPS Trials and Container Tribulations

In which I grow increasingly skeptical about using US-based providers, find a European VPS provider, test out some initial hosting architecture but decide against it, and other ramblings…

After the outcome of the recent US elections, the ass-kissing by Big Tech™, and stories of Oracle Cloud deleting user accounts for no reason, I decided it was time to move some of my web presence from the various US-based entities I use back under my own control, giving preference to European companies where possible.

Some of the things I want to move:

- static sites (GitHub Pages, Backblaze B2 + Cloudflare)

- dynamic sites (all over the place)

- code hosting (GitHub)

- my Mastodon instance (Oracle Cloud)

- Domain registrar (Cloudflare Registrar)

- DNS hosting (Cloudflare)

- Reverse tunnels (Cloudflare Tunnels)

- Object storage (Backblaze)

- Random hosted solutions (UptimeRobot, Healthchecks, …)

VPS Hosting

The first thing to pick was a hoster: after some searching I came across Netcup (affiliate link), a hoster based out of Germany, which regularly has deals on their hosting packages. I picked up one of those deals - a VPS 1000 G11 SE - 4 core x86, 8GB RAM, 512GB storage. It’s hosted in Nürnberg.

Bonus of Netcup: You can upload your own disk images or DVD images to use in the server control panel. This allows you to install whatever OS you want, as long as it runs on the architecture (x86_64).

Which Linux distribution?

So, I had a box. Now, what to run on it? My initial idea was to go with a fully containerised setup: skipping the Kubernetes route, as I only have one box (k8s is a great ecosystem, but heavily overused IMHO), and staying with Docker/Podman.

I quickly found openSUSE MicroOS, an immutable Linux distribution that is meant for running container workloads. The immutable part is something I hadn’t really dealt with on Linux before. An added bonus was that it uses Podman.

The key to adding packages to the base OS is the command transactional-update pkg install <pkg> and rebooting.

Ready, get set, go!

So, I now had a box, with a Linux distribution, and a container runtime. Since I wanted to keep things simple I decided to see how far I’d get with podman-compose, a podman replacement for docker compose.

Where are my containers at?! (after a reboot)

podman-compose isn’t quite like docker compose, partly because of the different nature of Podman vs Docker: Podman has no central daemon that spawns all your containers at boot time. So while testing out my first containers, I noticed they were gone when the box rebooted after downloading updates. Luckily this is easily solved:

podman-compose systemd -a create-unit

podman-compose -f your-container-file.yaml systemd -a register

systemctl --user enable --now podman-compose@your-container-file
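One thing worth checking here, assuming the units run under a regular user account as the systemctl --user call suggests: systemd only starts user units at boot when lingering is enabled for that account; otherwise they wait for the first login. A minimal sketch of a helper that checks and enables it (the function name ensure_linger is made up for illustration):

```shell
# Hypothetical helper: make sure systemd starts this user's units at boot,
# not only when the user first logs in.
ensure_linger() {
    linger_user="${1:-$(id -un)}"
    # "Linger=yes" means the user manager is started at boot.
    state="$(loginctl show-user "$linger_user" --property=Linger --value 2>/dev/null)"
    if [ "$state" = "yes" ]; then
        echo "lingering already enabled for $linger_user"
    else
        loginctl enable-linger "$linger_user" && echo "lingering enabled for $linger_user"
    fi
}
```

Calling ensure_linger without arguments acts on the current user; enabling lingering for another account typically needs root.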

What… is your favourite ~~color~~ source IP?

Another thing I noticed was that my ingress reverse-proxy Traefik was not seeing the correct source IP - it was just showing the internal IP address - which is also due to the non-root way podman works.

A solution for this is to use podman socket activation with Traefik. I found a handy tutorial by Erik Sjölund. In short:

Create the necessary systemd socket files:

For HTTP: /etc/systemd/system/http.socket:

[Socket]

ListenStream=80

FileDescriptorName=http

Service=traefik.service

[Install]

WantedBy=sockets.target

For HTTPS: /etc/systemd/system/https.socket

[Socket]

ListenStream=443

FileDescriptorName=https

Service=traefik.service

[Install]

WantedBy=sockets.target

You also need to start Traefik through systemd (by using Quadlets):

/etc/containers/systemd/traefik.container (a Quadlet file, from which traefik.service is generated)

[Unit]

After=http.socket https.socket

Requires=http.socket https.socket

[Service]

Sockets=http.socket https.socket

[Container]

Image=docker.io/library/traefik

Exec=--entrypoints.web --entrypoints.websecure --providers.docker --providers.docker.exposedbydefault=false

Network=mynet.network

Notify=true

Volume=%t/podman/podman.sock:/var/run/docker.sock

Quadlet, why you so slow?!

Next up, I noticed that it took forever to start a container using a quadlet. Strangely enough, I’ve never had this on the Fedora box on which I run containers in my homelab.

Searching some more pointed me to a known issue, where systemd will wait for the network-online target to be active, which actually never happens for user sessions due to a dependency issue. I invite you to read the issue (and linked issues), but in the end I solved this by adding an additional systemd service:

/etc/systemd/system/podman-network-online-dummy.service

[Unit]

Description=This service simply activates network-online.target

After=network-online.target

Wants=network-online.target

[Service]

ExecStart=/usr/bin/echo Activating network-online.target

[Install]

WantedBy=multi-user.target

and by enabling it through systemctl enable --now podman-network-online-dummy

Obscure IP problems… but only with dhcp?

The final hurdle I bumped into was that, for some obscure reason, my Traefik container would stop serving Let’s Encrypt certificates and only offer the built-in self-signed one. After quite some searching I found out that there’s some weird issue with the network activation: the container would start up before NetworkManager had gotten an IP from the DHCP server. Statically defining the IP address fixed the issue, as did adding a startup delay to the container quadlets. I filed issue #25656, and as a workaround switched to a static configuration.
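The static configuration can be sketched with nmcli, assuming NetworkManager manages the interface. The helper name, the connection name and the addresses below are placeholders (the addresses come from the RFC 5737 documentation range), not real values:

```shell
# Hypothetical helper: switch a NetworkManager connection from DHCP to a
# static IPv4 configuration and re-activate it.
set_static_ipv4() {
    conn="$1"; addr="$2"; gw="$3"; dns="$4"
    nmcli connection modify "$conn" \
        ipv4.method manual \
        ipv4.addresses "$addr" \
        ipv4.gateway "$gw" \
        ipv4.dns "$dns" &&
    nmcli connection up "$conn"
}

# Example call (placeholder values):
# set_static_ipv4 "Wired connection 1" 192.0.2.10/24 192.0.2.1 192.0.2.53
```

The real address, gateway and DNS values come from the provider's control panel; nmcli connection show lists the connection names to pick from.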

Architecture

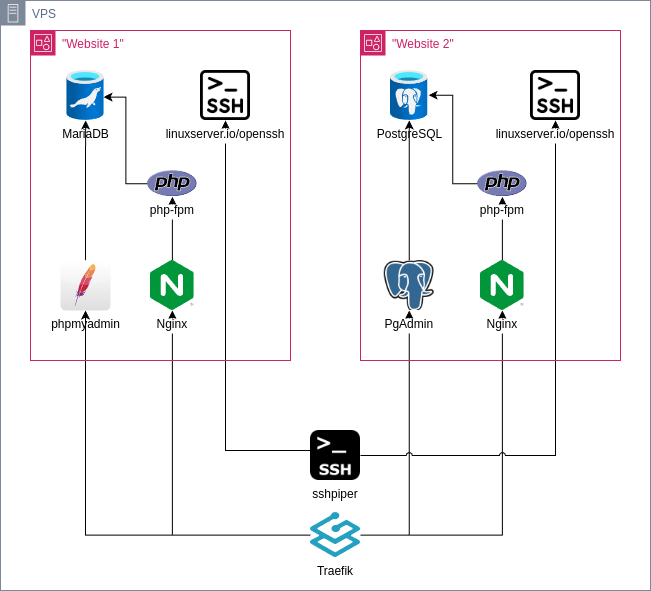

The architecture I had in mind was:

The outer (edge) layer would consist of:

- Traefik as a reverse proxy for web requests

- sshpiper as an SSH reverse proxy

Each website hosting would have its own set of containers:

- Nginx as a webserver

- php-fpm for the PHP runtime

- MariaDB together with phpMyAdmin and mysql-backup or

- PostgreSQL with PgAdmin

- linuxserver.io openssh

Outer (edge) layer

Traefik - web requests

I’ve been using Traefik for a while in my own homelab, so I also wanted to use it on the internet. The idea is that you just assign the necessary labels to your containers, and Traefik will do the rest. This worked fine most of the time. There were some hiccups when dealing with self-signed certificates and so on, but that’s all fairly well documented on the internet.

sshpiper - ssh reverse proxy

Another thing I wanted - which is common in the shared webhosting space - is a way to give other people besides me a way to upload files. I didn’t want to grant them access to the underlying system, just to “their” webspace. Ideally using an encrypted protocol like SSH.

Enter sshpiper - an SSH reverse proxy. You SSH into sshpiper, with a username and key, and it’ll proxy the connection towards a pre-configured backend sshd with its own key.

Since this runs on a separate port (I picked tcp/2222), I needed to add this to the SELinux managed ports, and then write a custom policy for it.

$ sudo semanage port -a -t ssh_port_t -p tcp 2222

(block sshpiper

    (blockinherit container)

    (allow process user_tmp_t ( sock_file ( write )))

    (allow process container_runtime_t ( unix_stream_socket ( connectto )))

    (allow process ssh_port_t ( tcp_socket ( name_bind )))

    (allow process port_type ( tcp_socket ( name_connect )))

    (allow process node_t ( tcp_socket ( node_bind )))

    (allow process self ( tcp_socket ( listen )))

)

This policy was loaded with the command

$ sudo semodule -i sshpiper.cil /usr/share/udica/templates/base_container.cil
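Loading the module alone does not confine anything; the container also has to be started with the new type. A minimal sketch, assuming the policy follows the udica naming convention where the type is the block name plus .process (the wrapper name is hypothetical, and the image and remaining options are left to the caller):

```shell
# Hypothetical wrapper: start a container under the custom SELinux type
# defined by the sshpiper policy block.
run_with_sshpiper_policy() {
    podman run --security-opt label=type:sshpiper.process "$@"
}

# Example call (image name and options are up to you):
# run_with_sshpiper_policy --detach --name sshpiper <image>
```

In a quadlet, the equivalent setting should be SecurityLabelType=sshpiper.process in the [Container] section.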

Inner layer (hosting)

The Nginx, php-fpm and openssh containers would be mounting the same set of volumes, allowing an end-user to ssh into the openssh container and update the files where needed. I also would mount the database and php-fpm sockets between the containers to minimize the use of network connectivity and possibilities for abuse - even though it’s all on my own private box.

Database management (PgAdmin and phpMyAdmin)

I wanted to offer pgAdmin and phpMyAdmin on a subdirectory of the domain, with basic auth in place. The basic auth is easy, the subdirectory less so, because the path prefix that you add is passed on to the destination.

To fix this, I added this to the dynamic config:

http:

  middlewares:

    dbadmin-strip-prefix:

      stripPrefix:

        prefixes:

          - /phpmyadmin

          - /pgadmin

and used the following labels on the container:

# Match on FQDN & directory

- "traefik.http.routers.unique_hosting_name_dbadmin.rule=Host(`${FQDN}`) && PathPrefix(`/phpmyadmin`)"

# Apply basic auth on the url (see the documentation on how to generate the user/pass)

- "traefik.http.middlewares.unique_hosting_name_dbadmin_auth.basicauth.users=user:pass"

# Strip /phpmyadmin off the URL

- "traefik.http.routers.unique_hosting_name_dbadmin.middlewares=dbadmin-strip-prefix@file,unique_hosting_name_dbadmin_auth"
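The user:pass value in the basic auth label is a username plus a password hash, and those hashes contain $ characters. Assuming the labels live in a compose-style file, where $ starts variable interpolation (as with ${FQDN} above), each $ has to be doubled. A small sketch of that escaping step; escape_dollars is a made-up helper name:

```shell
# Double every "$" so a compose-style file does not try to interpolate
# parts of the password hash as variables.
escape_dollars() {
    sed -e 's/\$/$$/g'
}

# Typical use, assuming htpasswd (apache2-utils / httpd-tools) is installed:
#   htpasswd -nB alice | escape_dollars
```

The escaped output can then be pasted after basicauth.users= in the label.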

For phpMyAdmin I bumped into the problem that the pmadb, where it will store configuration settings and some caches, didn’t exist. I created it using an initdb script, which is launched at mariadb container initialisation time.

You can put these scripts in a directory and mount it under /docker-entrypoint-initdb.d. More info can be found on the mariadb dockerhub page, under “Initializing the database contents”.

OpenSSH

By default the linuxserver.io openssh container starts off as root, later switching to the user-id and group-id specified. This created some additional hurdles, as I always want the files to be owned by the hosting owner. My solution was to add a custom script that would make sure the mounted hosting directory had setgid (set group id) on it.
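A minimal sketch of what such a script could look like, not the exact one used here. It assumes the script is dropped into /custom-cont-init.d, the directory linuxserver.io images run custom init scripts from, and that the hosting directory is mounted at the placeholder path shown in the comment:

```shell
# Hypothetical init script body: set the setgid bit on the hosting directory
# and all of its sub-directories, so new files inherit the directory's group.
ensure_setgid() {
    dir="$1"
    [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }
    find "$dir" -type d -exec chmod g+s {} +
}

# Example call (the mount path is an assumption):
# ensure_setgid /config/www
```

With setgid on the directories, files uploaded over SSH end up in the hosting owner's group regardless of which user created them.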

Musings after transferring a few sites…

- My initial attempts at doing all this with podman-compose weren’t successful. The documentation isn’t clear, I ran into more than one problem regarding variable interpolation (some variables would be interpolated, others not), and other features are somewhat supported but not really. In the end I gave up, and switched everything over to using quadlets. This worked without issues.

- Even though the quadlet setup works, it is non-trivial to set up. I created a few scripts to help with this, but… not great.

- The architecture I created above works, but it feels overly complex, and hard to maintain. There are a lot of pieces to juggle and keep up to date: 5 containers per hosting, 2 on the edge layer, and if I wanted to host additional (already containerised) workloads it’d mean having yet another set of containers to work with.

To say the least, I am not sold on maintaining this… and will investigate another route. For the interested parties, I’ve uploaded my template for MariaDB to Codeberg.
